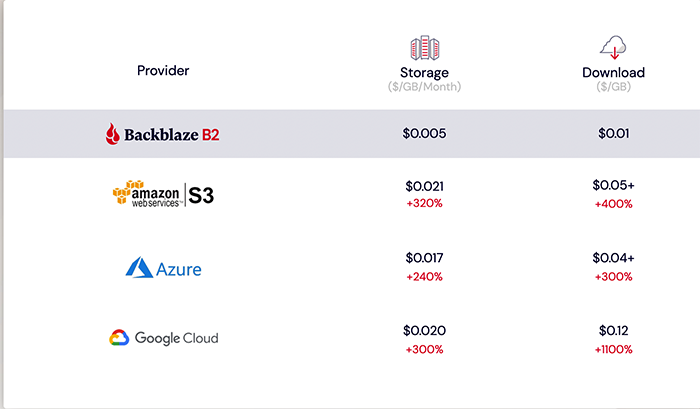

I’ve been a fan of BackBlaze’s B2 cloud storage service for a long time. The pricing is great and very straightforward: $.005 per GB per month, and $.01 per GB to download.

I use B2 for my cloud backups. I’m not thrilled about the $.01/GB download fee, but downloads are rare, and if I ever did need to get everything back, paying $10/TB would be the least of my worries. So far I have only done small restores in the 1-10GB range. I also use Dropbox for backups (see below) but don’t have enough space there, so my strategy is:

- Dropbox 2TB (which I already use for active files) = backup space to use first

- B2 = everything else that won’t fit
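To put the pricing above into numbers, here is a minimal sketch that estimates the monthly storage bill and the cost of a one-time restore at the rates quoted in this post ($.005 per GB per month, $.01 per GB downloaded). The function names are made up for illustration, and it treats 1TB as 1000GB.

```shell
#!/bin/sh
# Rates as quoted in this post; check the current B2 pricing before relying on them.
STORAGE_RATE=0.005   # dollars per GB per month
DOWNLOAD_RATE=0.01   # dollars per GB downloaded

# b2_monthly_cost GB: dollars per month to keep that many GB stored
b2_monthly_cost() {
    awk -v gb="$1" -v rate="$STORAGE_RATE" 'BEGIN { printf "%.2f\n", gb * rate }'
}

# b2_restore_cost GB: dollars to download that many GB once
b2_restore_cost() {
    awk -v gb="$1" -v rate="$DOWNLOAD_RATE" 'BEGIN { printf "%.2f\n", gb * rate }'
}

echo "Storing 2000GB:   \$$(b2_monthly_cost 2000) per month"
echo "Restoring 2000GB: \$$(b2_restore_cost 2000) one time"
```

Calling b2_restore_cost 1000 reproduces the $10/TB figure mentioned above.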

I’m using rclone, which is a really nice solution for backups. Let’s walk through setting it up.

Step 1: Register with B2

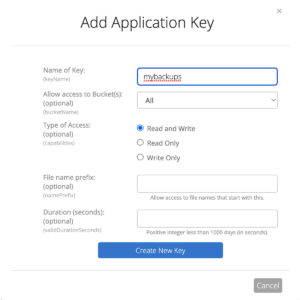

You’ll need to sign up for B2. Once you’ve created an account, head over to App Keys and create your Master Application Key, then click Add New Application Key. Give it any name you want and set it to Read and Write. Make sure to note the keyID and the applicationKey.

Step 2: Create a Bucket

Or many buckets. Buckets are where you’ll store your files.

Important Note: bucket names must be globally unique. I don’t mean just that you can’t have two buckets with the same name, I mean that your buckets can’t be named the same as any other user’s buckets. So forget calling your bucket “backups”.

There are two solutions:

- Use UUIDs for your bucket names.

- Use a UUID or similar prefix for your bucket names.

For example, if I want to back up my music collection, the bucket name “music” is assuredly already taken. But 541316cd-9333-49a2-aff9-f46d1fc731de is not.

You could use a separate UUID for each bucket, or you could pick one and use it as a prefix, so you’d have buckets named

- 541316cd-9333-49a2-aff9-f46d1fc731de-files

- 541316cd-9333-49a2-aff9-f46d1fc731de-music

- 541316cd-9333-49a2-aff9-f46d1fc731de-photos

etc. Bucket names can be up to 50 characters. A v4 UUID is 36 characters, so a UUID plus a dash is 37 characters, which leaves 13 more to play with.
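The prefix approach can be sketched in a few lines of shell. This is only an illustration: new_uuid and bucket_name are hypothetical helper names, and the script assumes either uuidgen or the Linux /proc/sys/kernel/random/uuid file is available. It refuses any name longer than the 50-character limit mentioned above.

```shell
#!/bin/sh
# Print a lowercase UUID, falling back to the Linux kernel generator
# when uuidgen is not installed.
new_uuid() {
    if command -v uuidgen >/dev/null 2>&1; then
        uuidgen | tr '[:upper:]' '[:lower:]'
    else
        cat /proc/sys/kernel/random/uuid
    fi
}

# bucket_name PREFIX SUFFIX: print "PREFIX-SUFFIX", or fail if the result
# is longer than 50 characters.
bucket_name() {
    name="$1-$2"
    if [ "${#name}" -gt 50 ]; then
        echo "bucket name too long (${#name} > 50): $name" >&2
        return 1
    fi
    printf '%s\n' "$name"
}

# Pick one prefix and reuse it for every bucket.
prefix=$(new_uuid)
for suffix in files music photos; do
    bucket_name "$prefix" "$suffix"
done
```

Save the prefix somewhere once it has been generated; running the script again produces a different UUID.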

Step 3: Download rclone

On a Debian system, this is “apt install rclone” and you should find it in the package manager of whatever distro you’re using.

Step 4: Set Up rclone

You’ll need to set up B2 for rclone. rclone supports an astonishing number of cloud storage services. In fact, there are 35 as of this writing – everything from Google Drive and OneDrive to pCloud, local disk, Hubic, 1Fichier, and your own SFTP or FTP accounts. Even SugarSync. Does anyone still use SugarSync?!?

# rclone config

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q> n

name> b2backup

Type of storage to configure.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / 1Fichier

\ "fichier"

2 / Alias for an existing remote

\ "alias"

3 / Amazon Drive

\ "amazon cloud drive"

4 / Amazon S3 Compliant Storage Provider (AWS, Alibaba, Ceph, Digital Ocean, Dreamhost, IBM COS, Minio, Tencent COS, etc)

\ "s3"

5 / Backblaze B2

\ "b2"

Storage> 5

** See help for b2 backend at: https://rclone.org/b2/ **

Account ID or Application Key ID

Enter a string value. Press Enter for the default ("").

account>

At this point, enter your keyID at the account> prompt and then your applicationKey at the key> prompt.

account> 12345

Application Key

Enter a string value. Press Enter for the default ("").

key> 12345

Permanently delete files on remote removal, otherwise hide files.

Enter a boolean value (true or false). Press Enter for the default ("false").

hard_delete> true

Edit advanced config? (y/n)

y) Yes

n) No (default)

y/n> n

Remote config

--------------------

[b2backup]

account = 12345

key = 12345

hard_delete = true

--------------------

y) Yes this is OK (default)

e) Edit this remote

d) Delete this remote

y/e/d> y

Current remotes:

Name Type

==== ====

b2backup b2

Select q for Quit Config. If you look at /root/.config/rclone/rclone.conf you’ll see a new configuration file has been created there. I’m doing this as root but you can run rclone as a non-privileged user as well.
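For reference, the remote that the interactive session creates is just a short INI-style section, so it can also be written by hand. The sketch below is an assumption-laden illustration: write_b2_remote, B2_KEY_ID, B2_APP_KEY and RCLONE_CONF are hypothetical names, 12345 is the placeholder value from the transcript, and the file goes to a scratch location by default so it cannot clobber a real config.

```shell
#!/bin/sh
# write_b2_remote FILE: write a minimal config with one B2 remote named
# "b2backup". B2_KEY_ID and B2_APP_KEY are hypothetical variable names.
write_b2_remote() {
    target="$1"
    mkdir -p "$(dirname "$target")"
    (
        umask 077   # the file holds credentials, keep it private
        cat > "$target" <<EOF
[b2backup]
type = b2
account = ${B2_KEY_ID:-12345}
key = ${B2_APP_KEY:-12345}
hard_delete = true
EOF
    )
}

# Default to a scratch file; set RCLONE_CONF to choose another path.
conf="${RCLONE_CONF:-$(mktemp)}"
write_b2_remote "$conf"
cat "$conf"
```

Running rclone --config "$conf" listremotes afterwards should list the b2backup remote if the file was written correctly.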

Step 5: Backup!

Backing up is simple. Here’s the command I use:

/usr/bin/rclone --transfers 16 --bwlimit "07:00,1000k 23:00,0" sync /my/files b2backup:541316cd-9333-49a2-aff9-f46d1fc731de-files

Let’s break that down:

--transfers 16: I allow up to 16 files to be uploaded simultaneously. I often have a lot of little files, and running many transfers in parallel makes the sync go quickly.

--bwlimit "07:00,1000k 23:00,0": This arcane option says “starting at 7am, use up to 1000KB/sec of bandwidth. Starting at 11pm, you can use an unlimited amount of bandwidth.” In other words, don’t hog the line during the day, but at night you can use 100% of it. The timetable has to be quoted so the shell passes it to rclone as a single argument.

sync: This is the command, followed by the local directory and then the destination in remote:bucket form, where b2backup is the remote created in Step 4.
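To make the bandwidth timetable less arcane, here is a small sketch that answers "which limit applies at a given time?" for a timetable string like the one above. It is an illustration of the intended schedule, not rclone's own implementation; limit_at is a hypothetical helper, and times before the first entry are assumed to carry over the last entry from the previous day.

```shell
#!/bin/sh
TABLE="07:00,1000k 23:00,0"

# limit_at HH:MM TABLE: print the limit of the most recent entry at or
# before the given time. Zero-padded HH:MM strings sort correctly as text.
limit_at() {
    awk -v now="$1" -v table="$2" 'BEGIN {
        n = split(table, entry, " ")
        for (i = 1; i <= n; i++) {
            split(entry[i], part, ",")
            last = part[2]
            if (now >= part[1]) cur = part[2]
        }
        # Before the first entry of the day, the last entry still applies.
        print (cur != "" ? cur : last)
    }'
}

for t in 03:00 07:00 12:30 23:30; do
    echo "$t -> $(limit_at "$t" "$TABLE")"
done
```

Here 0 stands for "no limit", so the early morning and late night slots come out unlimited and the daytime slots come out as 1000k.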

You should look through the B2 bucket settings and decide what retention you want. sync makes the bucket match the local directory by creating, updating, and deleting files, but if your bucket is set up to retain old versions, B2 will keep those for you. Just be careful you don’t end up with a 20TB monster volume because you’ve set old version retention too generously.
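Because sync deletes remote files that no longer exist locally, it can be worth previewing a run before doing it for real. Below is a minimal sketch of a wrapper script, assuming a remote named b2backup and the example path and bucket from this post. --dry-run is rclone's flag for reporting what would change without changing anything; the RCLONE variable, the backup function and the run/preview arguments are hypothetical names for this example.

```shell
#!/bin/sh
REMOTE="b2backup"
BUCKET="541316cd-9333-49a2-aff9-f46d1fc731de-files"
SOURCE="/my/files"
RCLONE="${RCLONE:-rclone}"   # override to test without touching B2

# Run the sync with the settings from this post; extra flags such as
# --dry-run are passed through to rclone.
backup() {
    "$RCLONE" --transfers 16 --bwlimit "07:00,1000k 23:00,0" "$@" \
        sync "$SOURCE" "$REMOTE:$BUCKET"
}

case "${1:-show}" in
    run)     backup ;;                # real sync
    preview) backup --dry-run ;;      # ask rclone what it would do
    *)       RCLONE=echo; backup ;;   # just print the arguments
esac
```

With no argument the script only prints the arguments it would pass to rclone, which is a cheap way to check the quoting before putting it in cron.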

Optional: Download the B2 CLI Tool

rclone by itself can do a lot of other things besides backup (check out “rclone ls” to list files in your bucket). But if you want to list buckets, create buckets, delete them, etc. you might find the B2 CLI tool helpful.
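A few of those other rclone commands are handy for checking a backup, and copy pulls files back down for a restore. The sketch below wraps them in shell functions, assuming a remote named b2backup; the function names and the RCLONE override are hypothetical, and nothing runs until you call one of them.

```shell
#!/bin/sh
REMOTE="b2backup"
RCLONE="${RCLONE:-rclone}"

# List the top-level directories of the remote (for B2, the buckets).
list_buckets() { "$RCLONE" lsd "$REMOTE:"; }

# list_files BUCKET: list every file in the bucket with its size.
list_files() { "$RCLONE" ls "$REMOTE:$1"; }

# bucket_size BUCKET: total number of objects and bytes in the bucket.
bucket_size() { "$RCLONE" size "$REMOTE:$1"; }

# restore BUCKET/PATH LOCAL_DIR: copy files from B2 back to local disk.
restore() { "$RCLONE" copy "$REMOTE:$1" "$2"; }
```

For example, restore 541316cd-9333-49a2-aff9-f46d1fc731de-files/docs /tmp/restore would copy that folder into /tmp/restore (a made-up path), with the download billed at the per-GB rate.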

Go here and download it for your OS. Save it in the /tmp directory and then:

mv /tmp/b2-linux /usr/local/bin/b2

chmod 755 /usr/local/bin/b2

For example, to list your buckets:

b2 list-buckets | sort -k 2

I, too, am a fan of B2 + rclone!

FYI, if you like and rely on rclone, [you can sponsor the author on Github](https://github.com/ncw).

Does it work as an endpoint that delivers direct links? Or is it the typical cloud that delivers strange URLs that no one downloads?