You may remember the scene in The Dark Knight where Batman turns every cell phone in Gotham into a sonar transmitter (through some hand-wavey tech) so he can spy on thirty million people and map out the city in perfect real time. It freaked Morgan Freeman out, and if that fictional idea freaked you out as well, you’re going to love what the boffins at Carnegie Mellon have been up to.

A paper published on arXiv is creating a stir. Here’s the summary:

Advances in computer vision and machine learning techniques have led to significant development in 2D and 3D human pose estimation from RGB cameras, LiDAR, and radars. However, human pose estimation from images is adversely affected by occlusion and lighting, which are common in many scenarios of interest. Radar and LiDAR technologies, on the other hand, need specialized hardware that is expensive and power-intensive. Furthermore, placing these sensors in non-public areas raises significant privacy concerns. To address these limitations, recent research has explored the use of WiFi antennas (1D sensors) for body segmentation and key-point body detection. This paper further expands on the use of the WiFi signal in combination with deep learning architectures, commonly used in computer vision, to estimate dense human pose correspondence. We developed a deep neural network that maps the phase and amplitude of WiFi signals to UV coordinates within 24 human regions. The results of the study reveal that our model can estimate the dense pose of multiple subjects, with comparable performance to image-based approaches, by utilizing WiFi signals as the only input. This paves the way for low-cost, broadly accessible, and privacy-preserving algorithms for human sensing.
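The abstract says the network takes the phase and amplitude of WiFi signals as input. As a rough illustration of where those two numbers come from, here is a minimal Python sketch. It assumes each measurement arrives as one complex value per WiFi subcarrier (channel state information, or CSI); the helper name and input layout are hypothetical, not the CMU team’s code. It takes the magnitude of each value as the amplitude and its angle as the phase, then unwraps the phase so that artificial jumps of 2π between adjacent subcarriers disappear. Published WiFi-sensing pipelines usually clean the phase further; this only shows the basic split.

```python
import cmath
import math


def amplitude_and_phase(csi):
    """Hypothetical helper: split complex per-subcarrier samples into
    amplitude and unwrapped phase (assumes one complex value per subcarrier)."""
    amplitude = [abs(h) for h in csi]            # magnitude of each sample
    raw_phase = [cmath.phase(h) for h in csi]    # angle, wrapped to [-pi, pi]

    unwrapped = raw_phase[:1]                    # first phase is kept as-is
    for prev, cur in zip(raw_phase, raw_phase[1:]):
        step = cur - prev
        # Remove whole turns of 2*pi so each step lands in [-pi, pi]
        step -= 2 * math.pi * round(step / (2 * math.pi))
        unwrapped.append(unwrapped[-1] + step)
    return amplitude, unwrapped


samples = [
    cmath.rect(1.0, 0.0),
    cmath.rect(2.0, 3.0),
    cmath.rect(1.0, 6.0 - 2 * math.pi),  # true phase 6.0 rad, reported wrapped (about -0.28)
]
amp, phase = amplitude_and_phase(samples)
```

Here the raw phases come out as 0, 3.0 and about −0.28 radians, and unwrapping turns them into 0, 3.0 and 6.0: the last step is read as a small move forward past π rather than a big move backward.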

In other words, Wi-Fi signals can be used to paint a 3D picture of you.

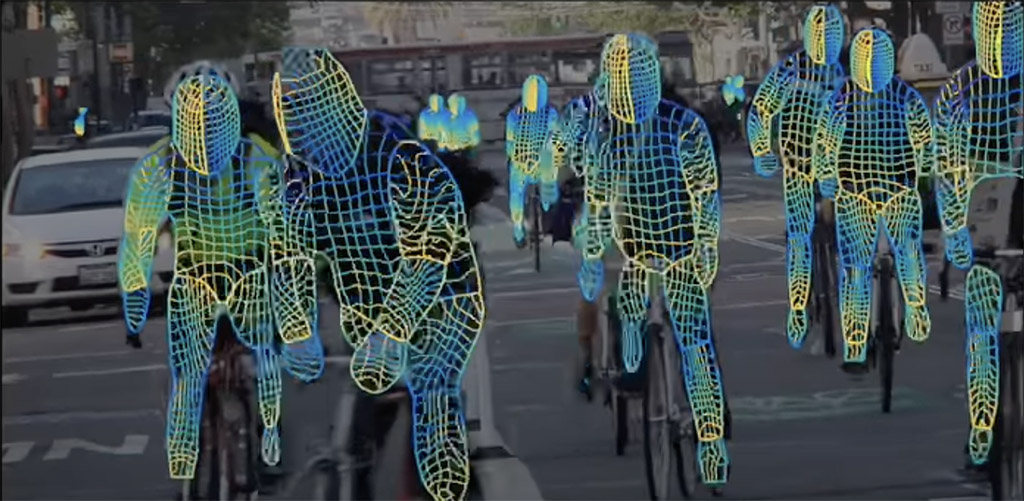

DensePose is “a fascinating project from Facebook AI Research that establishes dense correspondences from a 2D image to a 3D, surface-based representation of the human body.”
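DensePose-style models describe each pixel with a body-part index plus (u, v) coordinates on that part’s surface, which is what the CMU abstract refers to as “UV coordinates within 24 human regions.” The sketch below assumes an output head in the style of DensePose-RCNN: 25 scores per pixel (background plus 24 parts) and a separate u and v prediction for each part. The function name and input layout are illustrative assumptions, not actual code from Facebook AI Research or CMU.

```python
NUM_PARTS = 24  # "24 human regions"; label 0 is reserved for background


def decode_pixel(part_scores, u_per_part, v_per_part):
    """Hypothetical helper: turn one pixel's raw predictions into (part, u, v).

    part_scores: NUM_PARTS + 1 scores, index 0 = background
    u_per_part, v_per_part: NUM_PARTS values each, one per body part
    Returns (part, u, v); part 0 means background and u = v = 0.0.
    """
    if len(part_scores) != NUM_PARTS + 1:
        raise ValueError("expected one score for background plus each part")
    if len(u_per_part) != NUM_PARTS or len(v_per_part) != NUM_PARTS:
        raise ValueError("expected one u and one v value per part")

    # Pick the highest-scoring label (argmax over background + 24 parts)
    part = max(range(len(part_scores)), key=lambda i: part_scores[i])
    if part == 0:
        return 0, 0.0, 0.0
    # Parts are numbered 1..24, but the per-part lists are indexed 0..23
    return part, u_per_part[part - 1], v_per_part[part - 1]
```

Running this over every pixel yields the colorful body-surface maps DensePose is known for. Choosing the part first and only then reading that part’s (u, v) keeps the coordinates meaningful, since a u value is only defined relative to a specific body region.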

Facebook AI did this with cameras, isolating and mapping human figures in public scenes with what was no doubt top-of-the-line equipment for 2018.

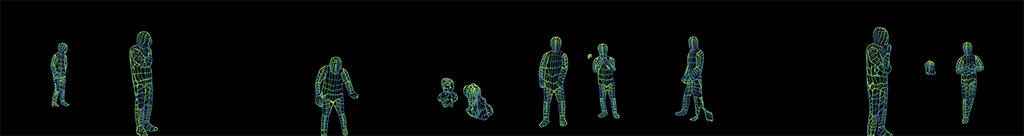

What this paper demonstrates is that two consumer-grade $49.99 TP-Link routers are enough to detect and map human figures in real time. As the authors put it, “Illumination and occlusion have little effect on WiFi-based solutions used for interior monitoring.”

In other words: Wi-Fi signals go through walls.

Here’s what the CMU guys were able to see using only Wi-Fi signals:

If you live in a dense urban area, look at your phone. How many Wi-Fi signals are you picking up right now? In the near future, they could be scanning you and letting whoever operates them know when you stand up, sit down, leave your home, etc. Someone parked at the curb might be able to watch every human in your house in real time.

Of course, there are practicalities that may limit this. On the other hand, this is what a few college researchers did with off-the-shelf hardware. Give it five years and…?

The authors talk somewhat unconvincingly about privacy. Sure, it’s better than a camera, but…guess what’s better than both? Neither. There are certainly applications for scanning and detecting humans in public spaces (security, airports, crowd control, smart-city technologies, energy saving, etc.), but those are all places where people have no reasonable expectation of privacy.

What if a creepy stalker lives in your apartment building?

The ramifications of this work will take a while to play out, but they’re worth keeping an eye on.
