We’re in an AI bubble, right? I can’t go to my YouTube app without a half dozen new vids popping up on that topic.

Most of those commentators are talking about the economy and how once the AI bubble pops, it’s going to be 2001 or 2008 all over again. Maybe. If you want to hear some sharp analysis on that front, I refer you to one of my favorite channels, Wall Street Millennial:

- Nvidia Engages In Twitter War With Michael Burry

- Musk’s Lawsuit Against OpenAI Explained

- Musk’s xAI Burns $1 Billion Per Month

- OpenAI Invests In Fake Robot Company

- The Coming AI Datacenter Collapse

- Sam Altman Freaking Out As Gov Rejects Bailout Request

- OpenAI Losing Billions on AI Slop Videos

And all of those videos were released in the last month.

Economics-wise, it’s easy to see the problems. Nvidia lends money to companies that use the money to buy Nvidia GPUs. OpenAI loses money on every prompt but has $1.4 trillion in datacenter commitments. Oracle has committed an existential amount of capital to building out datacenters. Etcetera.

But What About the Tech Itself?

Whether we’re in another 2001 or 2008 or 1929 is one question. Enthusiasm among tech companies is certainly frothy, and once a huge sector of the economy (last time it was mortgages) starts being pumped up with money, there’s a real risk of a crash.

But that’s economics. What about AI itself? Is that technology about to revolutionize the world?

I think there are three views: the maximalist, the skeptic, and the Gartner analyst.

The Maximalist View

In this view, AI is the most important technology ever developed. We are on the cusp of ASI (artificial superintelligence). We’ve already achieved AGI (artificial general intelligence), since ChatGPT is far smarter than your typical human. AI is shortly going to cure cancer, replace virtually all white-collar jobs, and, through robots, replace blue-collar jobs as well.

A just machine to make big decisions

Programmed by fellas with compassion and vision

We’ll be clean when their work is done

We’ll be eternally free, yes, and eternally young, ooh

“I.G.Y.” by Donald Fagen

(That happens to be one of my favorite songs, but Fagen was writing from the perspective of someone in the 1950s looking forward to the promised future, not someone in 2025.)

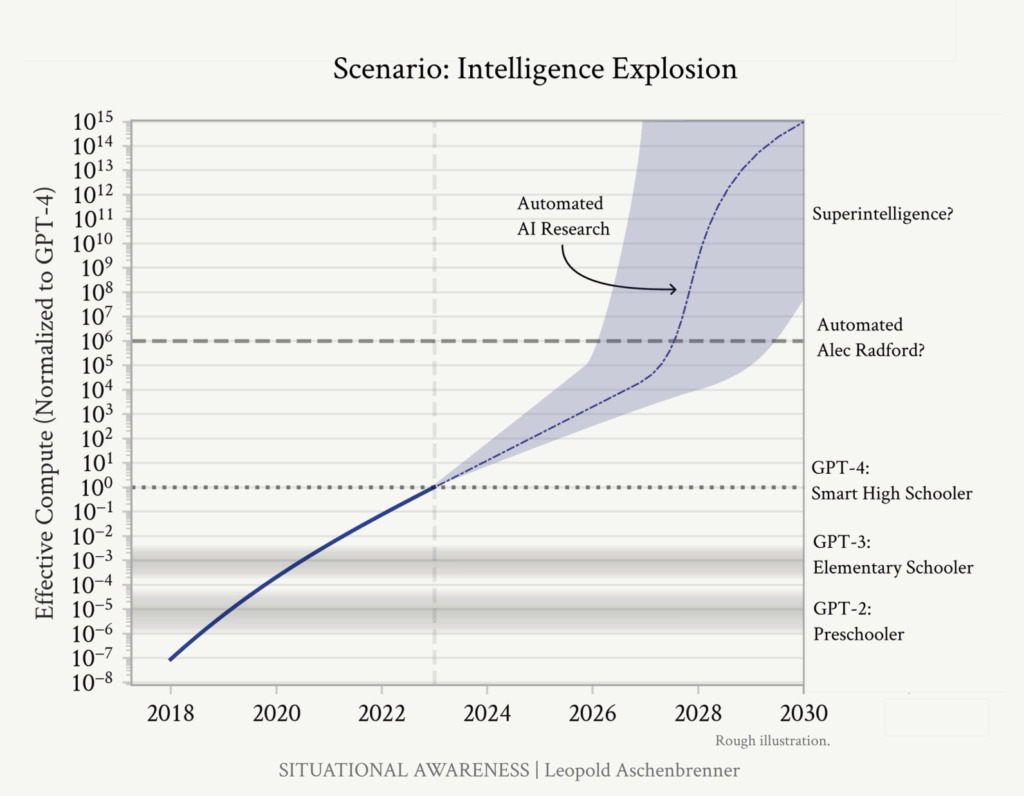

This optimistic view is best expressed by Leupold Aschenbrunner’s Situational Awareness (which is free) and can be summed up by this chart:

The argument goes like this:

- If you follow the trend, what AI can do has improved every year along a pretty straight line. That’s not all LLM tech, btw; it incorporates other machine learning technologies as well.

- Once AI becomes one tick smarter than the smartest human, there’s an intelligence explosion. The improvement line goes from a 45-degree slope to nearly straight up because AI can do research on making itself smarter. Want a billion AI researchers? Just spin them up.

- And from that point, there is no limit on intelligence.
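To make the shape of that argument concrete, here is a toy model in Python. It is not Aschenbrenner’s model: the function name `simulate`, the threshold, and the feedback rate are all made-up assumptions, chosen only to show how a steady line can bend sharply upward once progress starts feeding on itself.

```python
def simulate(years: int, base_gain: float = 1.0, threshold: float = 10.0,
             feedback: float = 0.5) -> list[float]:
    """Hypothetical toy model of the intelligence-explosion argument.

    Capability rises by a fixed step each year (the straight-line trend).
    Once it reaches `threshold` (standing in for "smarter than the smartest
    human"), each year's gain also scales with current capability, modeling
    AI doing research on itself. All numbers are illustrative assumptions.
    """
    capability = 0.0
    history = [capability]
    for _ in range(years):
        if capability < threshold:
            # Before the threshold: steady, linear progress.
            capability += base_gain
        else:
            # After the threshold: gains grow with capability (feedback loop).
            capability += base_gain + feedback * capability
        history.append(capability)
    return history


if __name__ == "__main__":
    history = simulate(15)
    for year in range(1, len(history)):
        gain = history[year] - history[year - 1]
        print(f"year {year:2d}: capability {history[year]:8.3f} (gain {gain:.3f})")
```

With these invented numbers, capability climbs by 1 per year for ten years, then gains 6, 9, 13.5, and so on. Set `feedback` to 0 and the curve stays a straight line; whether anything like that feedback term exists in the real world is exactly what the debate is about.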

Aschenbrenner’s book is well worth a read: he charts out not only the journey so far but also the concrete steps to get there, along with other practicalities (building a bajillion nuclear reactors, for one thing).

The Skeptic

At the other end of the spectrum is someone like Ed Zitron. I recommend his excellent one-hour discussion with Newsweek, in which he pours cold water on all of AI.

Zitron’s argument goes something like this:

- What exactly can you do with AI? You can make funny cat pics, and now funny cat videos. So what?

- AI makes writing easy code easier, but it doesn’t make writing hard code any easier.

- LLMs can generate, but they can’t reliably produce the same output twice. Their non-deterministic nature is their downfall.

- Everyone thinks “well, we’ve gotten this far with it, so in another year…” but Zitron says “prove it” and thinks LLM technology has plateaued. GPT-5, for example, was only a marginal improvement over GPT-4.

- What jobs have really been outsourced to AI? Virtually none. Other than perhaps low-level translation and some ultra-routine customer service jobs (your company’s password reset bot), there has been no mass white-collar layoff. There have been plenty of companies justifying routine layoffs (from overstaffing during COVID) as AI-induced, but there’s little evidence that AI is really replacing anyone.

- AI companies lose money on every prompt. Eventually they will run out of venture capital, at which point the cost of running prompts will rise sharply, and without hard economic benefits, the LLM-powered “revolution” will fizzle.
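Zitron’s non-determinism point comes from how LLMs pick each next word: the model scores the possible continuations, and the software often samples from those scores rather than always taking the top one. Here is a minimal Python sketch of that idea, assuming a made-up three-word vocabulary and made-up scores; the names `LOGITS` and `next_word` are hypothetical, and real models choose among tens of thousands of tokens.

```python
import math
import random

# Hypothetical next-word scores ("logits") a model might assign after some prompt.
LOGITS = {"cat": 2.0, "dog": 1.5, "robot": 0.5}


def next_word(logits: dict[str, float], temperature: float,
              rng: random.Random) -> str:
    """Pick the next word from toy scores, the way a sampler might."""
    if temperature == 0:
        # Greedy decoding: always take the highest-scoring word.
        return max(logits, key=logits.get)
    # Softmax with temperature: turn scores into probabilities.
    scaled = {w: s / temperature for w, s in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    weights = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(weights.values())
    words = list(weights)
    probs = [weights[w] / total for w in words]
    # Random draw: the same "prompt" can produce different words.
    return rng.choices(words, weights=probs, k=1)[0]


if __name__ == "__main__":
    rng = random.Random()
    print("sampled:", [next_word(LOGITS, 1.0, rng) for _ in range(10)])
    print("greedy: ", [next_word(LOGITS, 0, rng) for _ in range(10)])
```

Run it with `temperature=1.0` and the same toy prompt yields different words across calls; with `temperature=0` it always returns `"cat"`, so in this sketch the variability comes from the decoding step rather than from the scores themselves.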

Zitron isn’t a technophobe by any means, and he points out some cool things that machine learning has accomplished: Shazam identifying songs, dictating voice memos to your phone, doctors running scans that catch strokes earlier. All awesome. But the ChatGPTs and Anthropics of the world are on a road to nowhere that ends in economic incineration, and LLMs are a dead end.

Another interesting take comes from Professor Tim Dettmers, who wrote an article entitled “Why AGI Will Not Happen.” It’s not for the faint of heart, though if you just keep reading, you’ll get his main points even if the detailed technical arguments are over your head (I speak from experience). His argument is that it takes exponential investments to make linear improvements, and he draws some interesting parallels. He expects GPUs to be tapped out in the next year or two, and AI’s improvement curve to stop with them. The superintelligence explosion will never happen because of physical limits. It’s a good read.
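The “exponential investment for linear improvement” idea can be seen with a little arithmetic. The sketch below assumes, purely for illustration, that capability equals the base-10 logarithm of compute; that formula and the function names `capability` and `compute_for` are hypothetical stand-ins, not Dettmers’s actual model.

```python
import math


# Assumed toy scaling law (illustrative only): each 10x in compute buys
# one more "unit" of capability, i.e. capability = log10(compute).
def capability(compute: float) -> float:
    return math.log10(compute)


def compute_for(level: float) -> float:
    # Inverse of the toy law: compute needed to reach a capability level.
    return 10.0 ** level


if __name__ == "__main__":
    for level in range(1, 6):
        print(f"capability {level}: compute {compute_for(level):>9,.0f}")
```

Running it prints compute of 10, 100, 1,000, 10,000, and 100,000 for capability levels 1 through 5: each equal step up in capability costs ten times as much as the step before.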

The Gartner Analyst

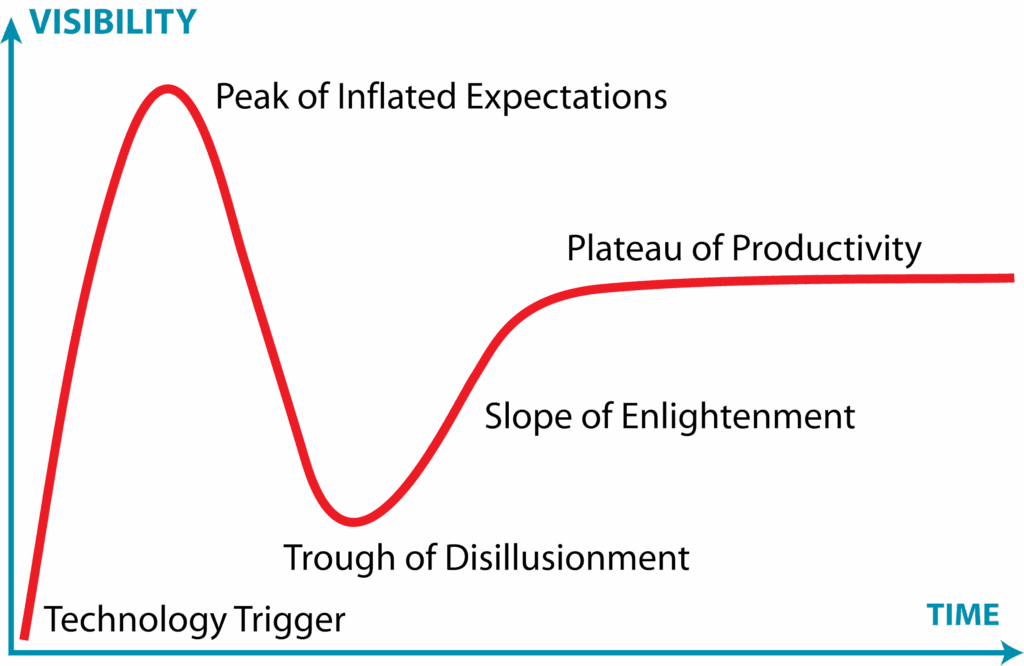

Gartner, an IT consulting firm, is famous for their “hype cycle,” which looks like this:

Or to simplify, new technology goes through three phases:

- Everyone thinks the new technology is going to change everything and is the greatest thing since sliced bread

- Everyone thinks the new technology was a bust and it was a waste of time

- Five to ten years later, everyone is using the technology

I’ve seen this play out many, many times in my IT career. To take just one example, I remember when storage area networks (SANs) were new and hot. Then the technology proved less than perfect, early adopters got burned, and suddenly SANs were an overblown fad. A few years after that, no one had local storage in datacenters anymore and SANs were de rigueur.

Some products don’t necessarily go through this cycle. Smartphones, for example, had a long history of failed ideas, and then Apple got them right. Sometimes a halo product just shatters everything before it.

Is AI like that? There have actually been so many hype cycles for AI that it’s hard to say. The term “AI winter” first appeared in 1984, when researchers who remembered the collapse in AI funding during the 1970s warned that another one was coming. Time and again, people have had science-fiction ideas about AI’s potential, only to have reality crush them.

Is this time different? Have the technology and our infrastructure progressed to the point where things really are different? One thing that is definitely different this time around is the amount of money being thrown around.

Or is this just another product going through the hype cycle? Maybe LLMs will slide into the trough of disillusionment soon and then win out over the long term. Maybe we’ll have a crash, but engineers will keep tinkering, and by 2030 or 2035, AI will quietly be everywhere.

Which view do you subscribe to: maximalist, skeptic, or Gartner analyst? Let us know in the comments below!
