Thanks to LowEndTalk member @symonp for sharing news of an EU plan to require messaging services to scan private messages to find child sexual abuse material (CSAM).

The proposed rules state that providers of hosting and "interpersonal communication services" (e.g., messaging apps and social media) must have technologies that allow them to effectively and reliably detect CSAM upon receiving a "detection order".

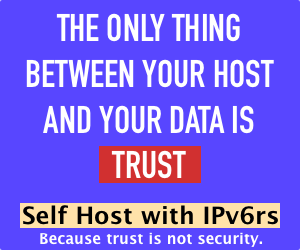

A "detection order" here is not a decree to scan all messages, but rather something akin to a warrant: "we want to see all traffic from John Q. Smith." However, once the provider has built the ability to scan, the privacy implications are the same either way. If I send a message on social media, the company will be able to read it. And of course, if a social media company can do that in the EU, it can do it anywhere.

There’s also a fair amount of new bureaucracy – in fact, a whole new agency to oversee things. All of this is justified in the name of stopping the spread of CSAM.

As I noted elsewhere, I've never viewed child pornography in my life and am repelled by its existence. However, I know that given 15 minutes I could find a vast quantity of it, and most of that time would be spent downloading the Freenet client or finding dark web sites, private trackers, etc. So if it's that obvious and available to me, isn't it just as available to everyone else? Of course it is. What is scanning people's FB Messenger going to accomplish? By all means, if someone has an idea or a better mousetrap for finding, eliminating, and prosecuting CSAM, I'm all for it, but the justification here is laughably thin.

Leave a Reply